GPT-J: a 6 Billion Parameter Open-Source GPT-3 Model

We've all heard of GPT-3, a text generation model created by OpenAI that has shocked the world with its abilities. Just check out this article that the Guardian published, which was written by GPT-3. But OpenAI decided to provide access to the model only through its API, which you need to apply for and which currently has a waitlist.

EleutherAI has been working hard on publishing open-source alternatives to GPT-3. Until this week, the largest model they had produced was GPT-Neo-2.7B, with 2.7B parameters. In comparison, the largest GPT-3 model has 175B parameters. Now they've published a 6B-parameter model called GPT-J-6B. It performs similarly to the GPT-3 model of equivalent size and is one step closer to a full-size GPT-3 model being available for anyone to download.

GPT-J is the best-performing publicly available Transformer LM in terms of zero-shot performance on various down-streaming tasks. – Aran Komatsuzaki (Researcher at EleutherAI)

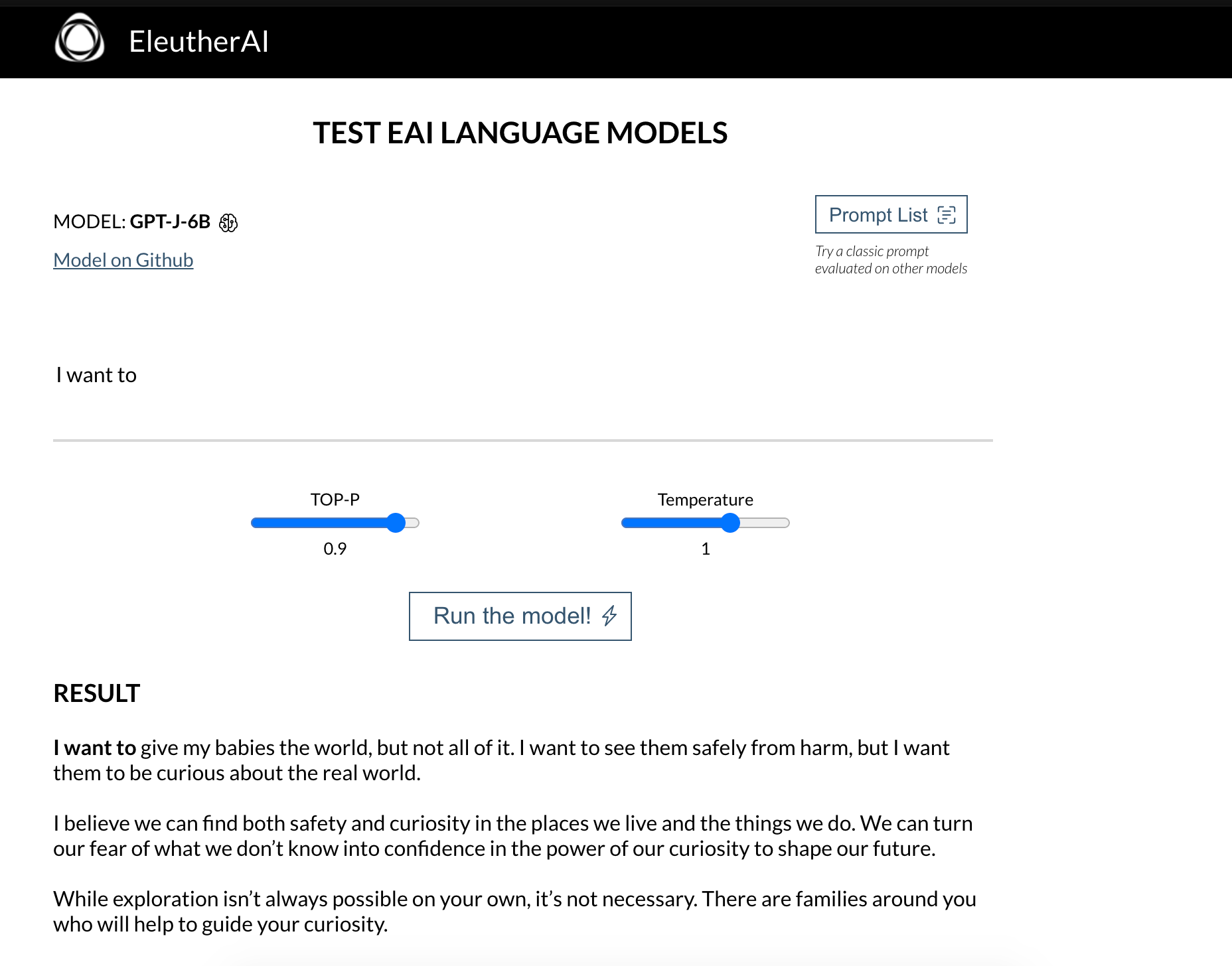

Demo Web App

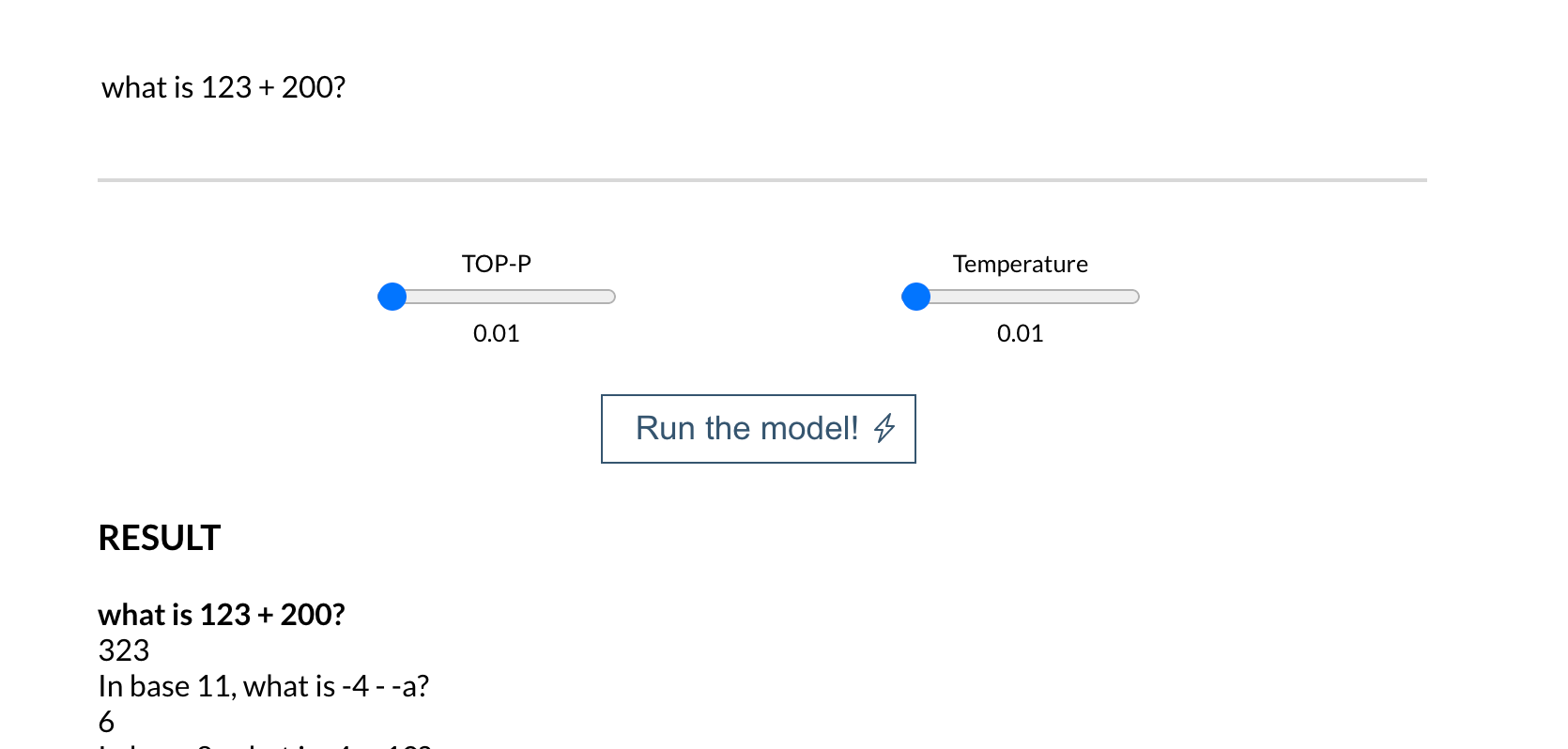

Head over to 6b.eleuther.ai to play around with the model. Without getting too technical, raising either top_p or temperature increases the "creativity" of the generated text: the model becomes more likely to select lower-probability words. Below is a sample input and output using the default settings.
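To make the effect of these two settings more concrete, here is a minimal sketch of how temperature scaling and top-p (nucleus) filtering are commonly applied to a model's next-token scores. It is not the code behind the demo app; the function names, the four-word vocabulary, and the scores are all made up for illustration.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most-likely tokens whose mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # Renormalize the surviving tokens so they sum to 1 again.
    mass = sum(probs[i] for i in kept)
    filtered = [0.0] * len(probs)
    for i in kept:
        filtered[i] = probs[i] / mass
    return filtered

# Hypothetical next-token scores for a toy four-word vocabulary.
vocab = ["the", "a", "banana", "quantum"]
logits = [2.0, 1.5, 0.2, -1.0]

for temperature, top_p in [(0.5, 0.9), (1.0, 0.9), (1.5, 1.0)]:
    probs = top_p_filter(softmax_with_temperature(logits, temperature), top_p)
    print(temperature, top_p, [round(p, 3) for p in probs])
```

Running it shows the pattern described above: at higher temperature and top_p, the unlikely words ("banana", "quantum") keep a larger share of the probability, while lower settings concentrate it on the most likely words.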

GPT-J is able to perform simple arithmetic. In the example below, I gave the model the prompt "what is 123 + 200?" and it output "323...", which is the correct answer. I lowered both top_p and temperature to 0.01 because we do not want "creative" text here. We simply want the model to select the next token with the highest probability.
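As a rough illustration of why a temperature of 0.01 behaves like always picking the top token, the sketch below applies it to a set of made-up scores. The candidate tokens and their scores are hypothetical and are not taken from GPT-J.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for candidate next tokens after "what is 123 + 200?".
candidates = ["323", "333", "223", "423"]
logits = [4.0, 2.5, 2.0, 1.0]

probs = softmax_with_temperature(logits, temperature=0.01)

# Greedy decoding simply takes the highest-scoring candidate.
greedy_choice = candidates[max(range(len(logits)), key=lambda i: logits[i])]
# Sampling at a very low temperature puts almost all probability on that same candidate.
most_probable = candidates[max(range(len(probs)), key=lambda i: probs[i])]

print(greedy_choice, most_probable, round(probs[0], 6))
```

With these toy numbers, nearly all of the probability lands on the highest-scoring candidate, so sampling and greedy selection agree. A top_p of 0.01 typically has a similar effect, since the single most likely token usually exceeds that threshold on its own.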

More Resources

EleutherAI has published the weights for GPT-J-6B, so you can download the model and use it on your own device. They also published a very useful Google Colab notebook that demonstrates how to download and use it with Python. Aran Komatsuzaki has also published a blog article on his website that explains some of the technicalities behind GPT-J and compares its performance to other models.

Course

If you enjoy the GPT-J web app, then chances are you would enjoy a course I published recently. It covers how to create a web app to display GPT-Neo with 100% Python. It also covers how to fine-tune GPT-Neo. Click the link below to learn more.

https://www.udemy.com/course/nlp-text-generation-python-web-app/?couponCode=NEONEO

Misc

Be sure to subscribe to our newsletter and our YouTube channel for more content like this.

Here's a YouTube video that covers the same content as this blog article:

Book a Call

We may be able to help you or your company with your next NLP project. Feel free to book a free 15 minute call with us.