Gramformer: Correct Grammar With a Transformer Model

Whether you're writing an email or a blog post – using correct grammar goes a long way. But, built-in grammar tools in programs like Microsoft Word only correct basic mistakes and premium software like Grammarly may be out of reach for some people. Thankfully, a machine learning scientist named Prithiviraj Damodaran trained an open-sourced Transformer model that corrects grammar. He also released a Python package to make it easier to use called Gramformer.

In addition to day-to-day use, Gramformer has the potential to be used for preprocessing data. For example, you could use it to correct the grammar of your training, evaluation and testing data. This may improve the performance of whatever NLP task you're competing, such as text classification. With that said, Gramformer has the potential to not only help you with your own personal writing but also help improve the performance of your NLP systems.

Gramformer – the Python Package

Gramformer is not available on PyPI. But, we can download it from its GitHub repo with the following line of code.

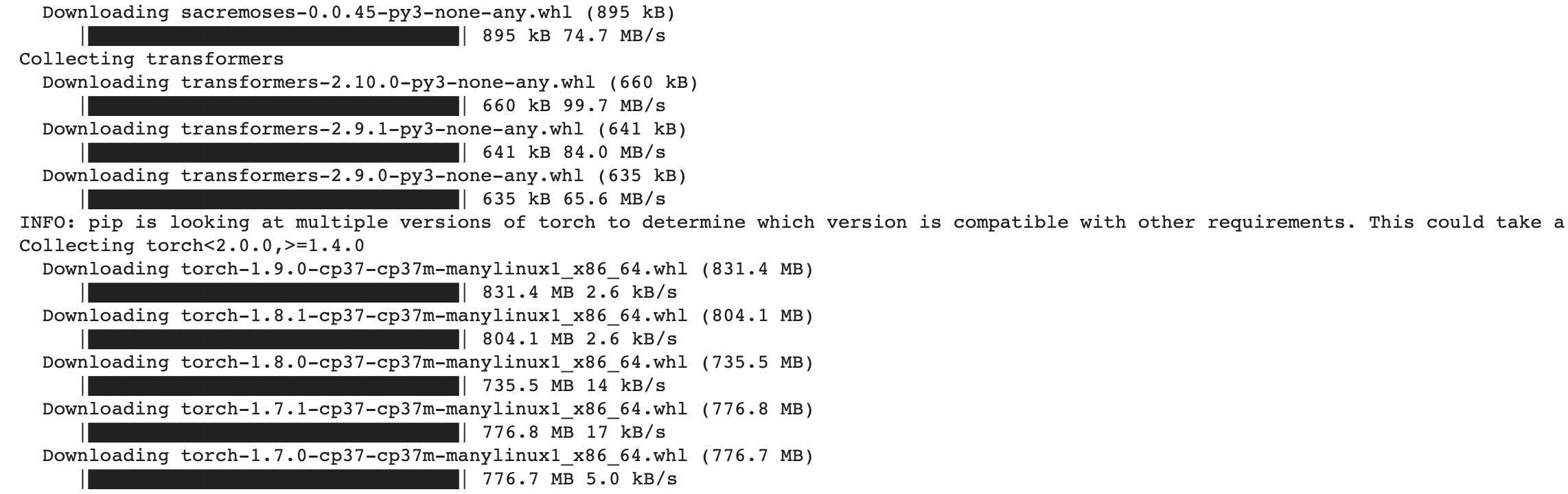

!pip3 install -U git+https://github.com/PrithivirajDamodaran/Gramformer.gitHowever, when I tried to install it within Google Colab, it kept on installing multiple versions of is dependencies, as shown below. It then finally crashed with the message "ERROR: ResolutionImpossible." There's also an open issue where for some people, it installs endlessly. In addition, the package does not provide support for modifying the text generation settings. So, I suggest that we use a more stable package to use the model, and I happen to be the lead maintainer of a Python Package that accomplishes just this called Happy Transformer.

Gramformer With Happy Transformer

Happy Transformer is built on top of Hugging Face's Transformers library to make it easier to use. It allows users to implement and train models for multiple different tasks like text classification, text generation and more with just a few lines of code. It's available on PyPI, so we can download it with just one line of code.

pip install happytransformerThe model we'll be using performs a text-to-text task. Meaning, given a piece of text, it produces a standalone piece of text. Other text-to-text tasks include translation and summarization. So, from Happy Transformer, let's import a class called HappyTextToText.

from happytransformer import HappyTextToTextCurrently, the only stable Gramformer model is called "prithivida/grammar_error_correcter_v1" and is available on Hugging Face's model distribution network. This model is a "T5" model and can be downloaded by creating a HappyTextToText object. The first position parameter for this class is the model type and the second position parameter is for its name.

happy_tt = HappyTextToText("T5", "prithivida/grammar_error_correcter_v1")T5 models are able to perform multiple tasks with a single model. The model accomplishes this by learning the meaning of different prefixes before the input. For example, for some models you can perform summarization by adding "summarize:" and then including text. Then with the same model, you can use the prefix "Translate English to German:." For the Gramformer model we're using, the only prefix we need is "gec:."

Below is text that contains grammar and spelling error that we'll correct using the Gramformer model. Notice how we added the correct prefix.

text = "gec: " + "I likes to eatt. applees"Now, unlike with the Gramformer library, Happy Transformer allows us to modify the text generation settings. In one of my recent courses, I discuss the theory behind several text generation algorithms in regard to text generation models like GPT-Neo and GPT-2. The same theory applies to text-to-text models, and you can sign up for the course here. Below is code on how to instantiate settings for a text generation algorithm called top-k sampling.

from happytransformer import TTSettings

settings = TTSettings(do_sample=True, top_k=10, temperature=0.5, min_length=1, max_length=100)Finally, we can perform grammar correction with just one line of code by calling happy_tt.generate_text(). We'll provide the text to the first position parameter and include the settings for its "args" parameter.

result = happy_tt.generate_text(text, args=settings)

print(result.text)Result: I like to eat apples.

Conclusion

And there we go! We just corrected the grammar and spelling of a text input using a T5 Transformer model. Now, I imagine some of you will use Gramformer for data preprocessing, while others may use it to prevent sending an embarrassing grammatically incorrect email. Regardless of your future applications, I wish you luck and stay happy everyone!

Helpful Links

Text generation course by me (coupon attached)

Subscribe to my YouTube channel for an upcoming video on Gramformer

Colab: code within this article

Support Happy Transformer by giving it a star 🌟🌟🌟