Text Classification With Hugging Face's API

In this article, we'll discuss how to implement text classification models using Hugging Face's Inference API. Deploying Transformer models is often a struggle because it requires both a system with significant compute and backend programming knowledge. With Hugging Face's API, you can write just a few lines of code and allow any device to leverage Transformer models.

Hugging Face's Model Hub allows anyone to upload models for the public to use. I suggest you search to see if someone has already uploaded a model that matches your use case before you fine-tune your own. For example, several sentiment analysis models are available. Using a model from Hugging Face's Model Hub instead of training your own has the potential to save you time and money.

Check out this article to learn how to upload your own model to Hugging Face's Model Hub.

Implementation

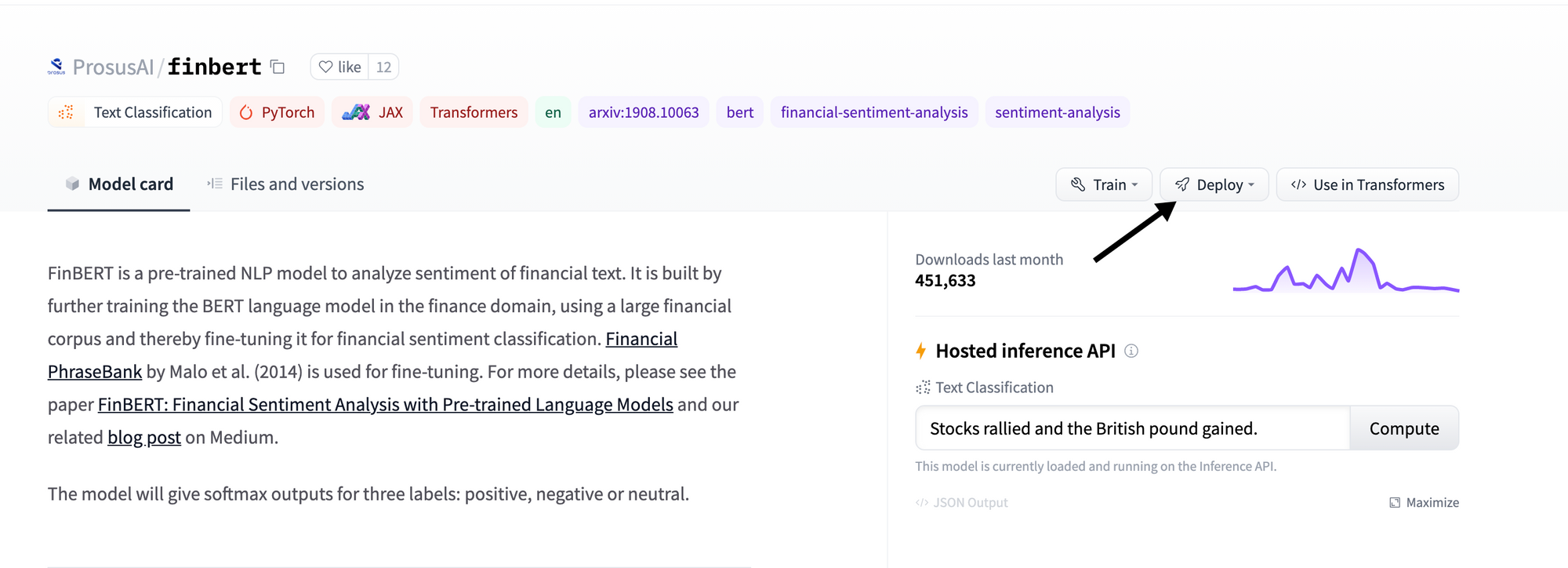

First off, head over to URL to create a Hugging Face account. Then, you can search for text classification by heading over to this web page. For this tutorial, we'll use one of the most downloaded text classification models called FinBERT, which classifies the sentiment of financial text.

After you've navigated to a web page for a model, select "deploy" located in the top right of the page and select "Accelerated Inference."

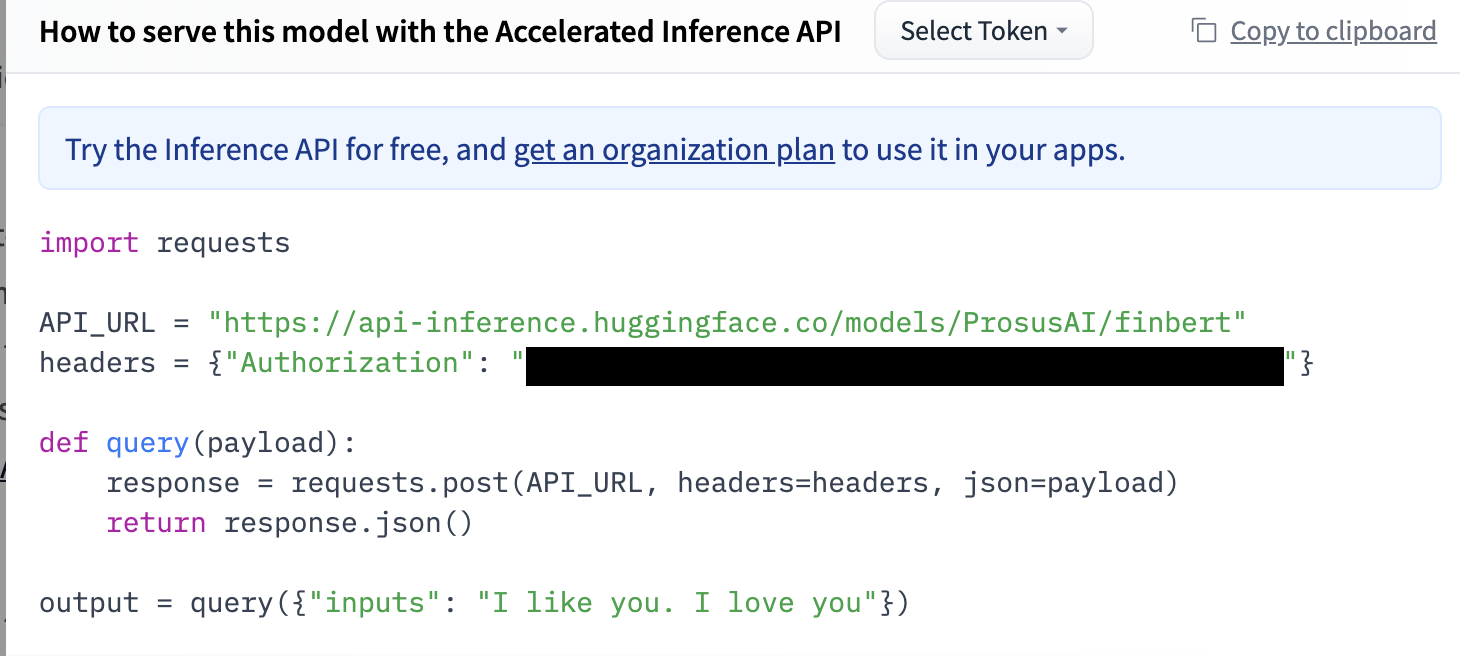

This will then generate code that you can copy into your Python environment. Here's the code that was generated when I performed this process.

The only library we need to install is called "requests," which can be installed with a simple pip command, as shown below.

pip install requests

Here's the code shown above, except I added comments to help explain it. I also changed the input to be more fitting.

# The package we'll use to send an
# HTTP request to Hugging Face's API
import requests

# A URL to indicate which model we'll use.
# If you visit it, the page displays information on the model.
API_URL = "https://api-inference.huggingface.co/models/ProsusAI/finbert"

# A dictionary that contains our private key.
# Be sure to change this to include your own key.
# The value takes the form "Bearer <your API token>".
headers = {"Authorization": "PRIVATE"}

# A function that we'll call to run the model
def query(payload):
    # Makes a request to Hugging Face's API
    response = requests.post(API_URL, headers=headers, json=payload)
    return response.json()

# The API expects the text under the "inputs" key
output = query({"inputs": "Tesla's stock increased by 20% today!"})
print(output)

Result:

[[{'label': 'positive', 'score': 0.9411582946777344}, {'label': 'negative', 'score': 0.010023470968008041}, {'label': 'neutral', 'score': 0.0488181971013546}]]
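The code above hardcodes the key and assumes every request succeeds. As a minimal sketch of a more defensive variant, the code below reads the token from an environment variable, sets a timeout, and raises an exception when the request fails. The variable name `HF_API_TOKEN` and the helper names `build_headers` and `build_payload` are hypothetical choices for this example, not something the API requires. The `wait_for_model` option is assumed to behave as the Inference API documentation describes: the server waits for a model that is still loading instead of returning an error.

```python
import os

API_URL = "https://api-inference.huggingface.co/models/ProsusAI/finbert"


def build_headers(token):
    """Build the Authorization header from an API token."""
    return {"Authorization": f"Bearer {token}"}


def build_payload(text, wait_for_model=True):
    """Wrap the text in the JSON body sent to the API."""
    return {"inputs": text, "options": {"wait_for_model": wait_for_model}}


def query(text, post=None, timeout=30):
    """Send the text to the model and return the parsed JSON response.

    `post` exists only so the HTTP call can be swapped out in tests;
    by default it is `requests.post`.
    """
    if post is None:
        import requests  # imported here so the helpers above work without it
        post = requests.post
    # HF_API_TOKEN is a hypothetical variable name; use whichever you set.
    token = os.environ["HF_API_TOKEN"]
    response = post(
        API_URL,
        headers=build_headers(token),
        json=build_payload(text),
        timeout=timeout,
    )
    # Raise an exception on a 4xx/5xx status instead of returning an error body.
    response.raise_for_status()
    return response.json()
```

With the environment variable set, `query("Tesla's stock increased by 20% today!")` should return the same kind of nested list as before. Keeping the token out of the source file also makes it harder to publish the key by accident.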

The output is a list of lists, where the inner list contains one dictionary per label, each with the fields "label" and "score." We can isolate the first entry, which here is the highest-scoring label, and its respective score with the code below.

print("Top label:", output[0][0]["label"])
print("Top score:", output[0][0]["score"])

Result:

Top label: positive
Top score: 0.9411582946777344
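Indexing with `output[0][0]` returns the first entry, which is the top label only if the API lists the highest score first. In the result printed earlier, the remaining two labels are not in descending order, so it may be safer not to rely on position. Here is a minimal sketch that picks the label by score instead, using the result from this article as sample data (the `top_prediction` helper is a hypothetical name):

```python
# Sample data copied from the result printed earlier in this article.
output = [[
    {"label": "positive", "score": 0.9411582946777344},
    {"label": "negative", "score": 0.010023470968008041},
    {"label": "neutral", "score": 0.0488181971013546},
]]


def top_prediction(predictions):
    """Return the dictionary with the highest score."""
    return max(predictions, key=lambda p: p["score"])


best = top_prediction(output[0])
print("Top label:", best["label"])
print("Top score:", best["score"])

# Rank every label from most to least likely.
ranked = sorted(output[0], key=lambda p: p["score"], reverse=True)
print([p["label"] for p in ranked])  # ['positive', 'neutral', 'negative']
```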

Each score is between 0 and 1, and together the scores sum to 1 (up to floating-point rounding).
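As a quick check of that claim, the sketch below adds up the three scores from the result printed earlier. Because the scores are floating-point numbers, the sum is compared with 1 using a small tolerance rather than exact equality; the tolerance value is an assumption chosen for illustration.

```python
import math

# Scores copied from the result printed earlier in this article.
scores = {
    "positive": 0.9411582946777344,
    "negative": 0.010023470968008041,
    "neutral": 0.0488181971013546,
}

total = sum(scores.values())

# Every score lies between 0 and 1.
assert all(0.0 <= s <= 1.0 for s in scores.values())

# The scores sum to 1 up to floating-point rounding.
assert math.isclose(total, 1.0, abs_tol=1e-6)

print(total)
```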

Conclusion

You just learned how to perform text classification with Hugging Face's API, so there's no need to set up a server or invest in hardware to leverage your favourite Transformer models. Be sure to subscribe to my mailing list and to my YouTube channel. Below are some links to related content I created. Stay happy, everyone!

Text Classification With Happy Transformer

Do you want to run the model on your own hardware instead? Then check out this tutorial on how to implement text classification models using a Python package I created called Happy Transformer that simplifies implementing and training Transformer models.

Text Generation With Hugging Face's API

Here's a link to an article that describes how to use Hugging Face's Inference API to implement GPT-Neo, an open-source alternative to GPT-3.

Course

If you want to learn how to create a web app to display GPT-Neo, and how to fine-tune the smallest 125M model, then I suggest you check out this course I created. I attached a coupon to the link.

Book a Call

We may be able to help you or your company with your next NLP project. Feel free to book a free 15-minute call with us.